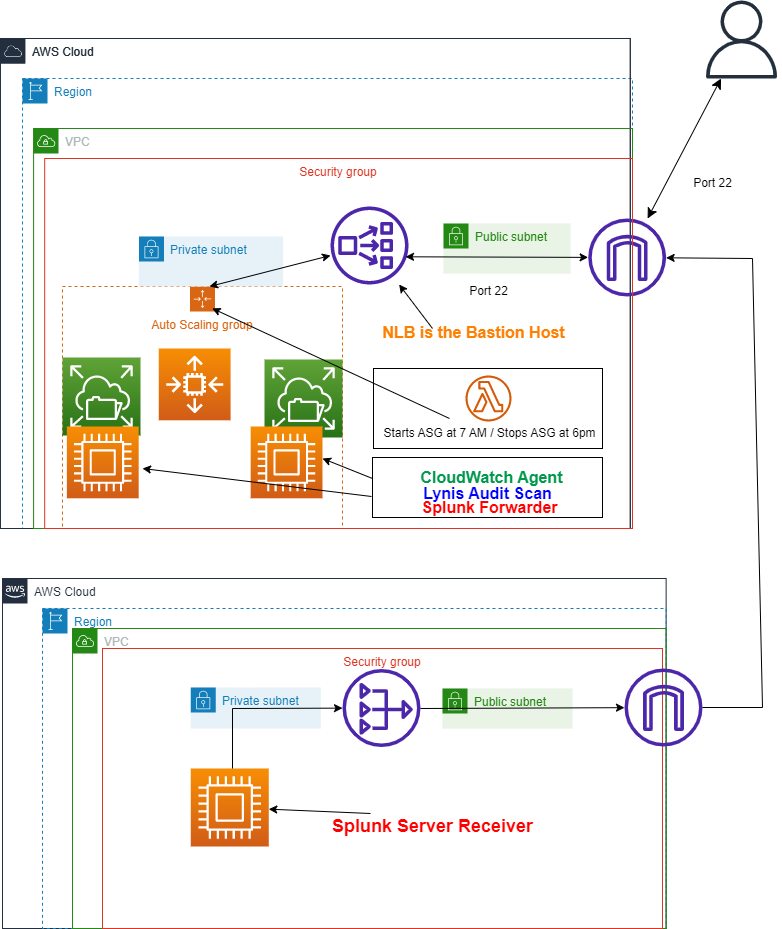

Created a Bastion Host Through a Network Load Balancer

I recently deployed an unconventional bastion host behind an internet-facing Network Load Balancer to improve security in an Amazon Linux 2 production environment. The bastion host runs as a private Auto Scaling group of EC2 instances built from a custom launch template, whose EC2 user data installs the CloudWatch agent and Lynis. Authorized users can now SSH on port 22 through the Network Load Balancer into the private group of EC2 instances. The instances also mount an Elastic File System (EFS) file system for easy file sharing.
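The load balancer side of this setup can be sketched as boto3 request parameters. This is a minimal sketch under stated assumptions, not the configuration used in the deployment: the helper names, the default target group name, and any ARNs or IDs passed in are hypothetical. Only the TCP protocol and port 22 come from the description above.

```python
"""Sketch: request parameters for an NLB that forwards SSH (TCP 22) to private instances."""


def build_target_group_params(vpc_id: str, name: str = "bastion-ssh-tg") -> dict:
    """Keyword arguments for elbv2.create_target_group (the name is a hypothetical default)."""
    return {
        "Name": name,
        "Protocol": "TCP",  # Network Load Balancers forward at layer 4
        "Port": 22,
        "VpcId": vpc_id,
        "TargetType": "instance",
        "HealthCheckProtocol": "TCP",
    }


def build_listener_params(load_balancer_arn: str, target_group_arn: str) -> dict:
    """Keyword arguments for elbv2.create_listener."""
    return {
        "LoadBalancerArn": load_balancer_arn,
        "Protocol": "TCP",
        "Port": 22,
        "DefaultActions": [
            {"Type": "forward", "TargetGroupArn": target_group_arn}
        ],
    }


def create_ssh_listener(load_balancer_arn: str, target_group_arn: str) -> dict:
    """Create the listener. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builders above work without boto3 installed

    elbv2 = boto3.client("elbv2")
    return elbv2.create_listener(
        **build_listener_params(load_balancer_arn, target_group_arn)
    )
```

Keeping the parameter builders separate from the API call makes it possible to review or unit-test the request before anything is created in the account.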

When a user logs in to a server, a custom warning message announces the terms and conditions of entering the private production environment. The message comes from a revised MOTD file, which the launch template configures so that it persists across reboots (it was later baked into a golden AMI).

To help keep the system secure, Lynis scans the EC2 instances tagged as part of the private Auto Scaling group every two days at 12 PM EST and stores the reports in an EFS directory. A shell script run through Systems Manager Run Command automates the scan.
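A tag-targeted Run Command request of this kind could look roughly like the following. This is a hedged sketch, not the command used in the deployment: the function names are hypothetical, and the `Env=Bastion Host` tag and the `/home-directories/audit_reports` path are taken from the scripts later in this post and assumed to apply here. `AWS-RunShellScript` is the standard Systems Manager document for shell commands.

```python
"""Sketch: Run Command request that runs a Lynis audit on tagged instances."""


def build_lynis_send_command(
    tag_key: str = "Env",
    tag_value: str = "Bastion Host",
    report_dir: str = "/home-directories/audit_reports",
) -> dict:
    """Keyword arguments for ssm.send_command targeting instances by tag."""
    commands = [
        f"mkdir -p {report_dir}",
        # $(hostname) is expanded on the instance, so each host writes its own report
        f"lynis audit system --quick > {report_dir}/lynis-audit-$(hostname).txt",
    ]
    return {
        "DocumentName": "AWS-RunShellScript",
        "Targets": [{"Key": f"tag:{tag_key}", "Values": [tag_value]}],
        "Parameters": {"commands": commands},
        "Comment": "Lynis audit of bastion hosts",
    }


def send_lynis_scan() -> str:
    """Send the command and return its ID. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder works without boto3 installed

    ssm = boto3.client("ssm")
    response = ssm.send_command(**build_lynis_send_command())
    return response["Command"]["CommandId"]
```

Because the report directory lives on the shared EFS mount, every instance writes into the same place and no file copying between hosts is needed.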

Next, a Splunk Forwarder was manually installed in the ASG environment. The forwarder on the bastion host's EC2 instances relays data to a separate instance that the development team operates as a Splunk server/receiver, enabling centralized logging.

Two scheduled Lambda functions start the development environment's Auto Scaling group EC2 instances at 7 AM and stop them at 6 PM EST, which reduces costs and matches business hours.
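EventBridge schedule expressions are evaluated in UTC, so the EST times above have to be shifted before they are used as Lambda triggers. The sketch below shows one way to derive them. It assumes a fixed UTC-5 offset (daylight saving time is not handled), a schedule that runs every day, and a hypothetical helper name; the post does not say how the triggers were actually defined.

```python
"""Sketch: convert an EST wall-clock hour into an EventBridge cron expression (UTC)."""

EST_UTC_OFFSET_HOURS = -5  # Eastern Standard Time; EDT (UTC-4) is not handled here


def eventbridge_cron_for_est(hour_est: int, minute: int = 0) -> str:
    """Return a daily EventBridge cron expression for the given EST time."""
    if not 0 <= hour_est <= 23:
        raise ValueError("hour_est must be between 0 and 23")
    if not 0 <= minute <= 59:
        raise ValueError("minute must be between 0 and 59")
    hour_utc = (hour_est - EST_UTC_OFFSET_HOURS) % 24
    # Field order: minutes hours day-of-month month day-of-week year.
    # One of day-of-month or day-of-week must be "?".
    return f"cron({minute} {hour_utc} * * ? *)"


if __name__ == "__main__":
    print("start:", eventbridge_cron_for_est(7))   # 7 AM EST
    print("stop: ", eventbridge_cron_for_est(18))  # 6 PM EST
```

With these inputs, the start rule fires at 12:00 UTC and the stop rule at 23:00 UTC. During daylight saving time the same expressions would fire one hour later in local time.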

After the bastion host environment was created, the development team asked for the CloudWatch agent to be customized to report additional memory and disk space metrics to CloudWatch. An Ansible playbook was created and run through Systems Manager to accomplish this.

Finally, another golden Amazon Machine Image (AMI) version was created from one of the running EC2s in the autoscaling group to ensure future deployments are quick and easy.
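Creating an AMI from a running instance can be scripted with the EC2 `create_image` call. The following is a sketch under assumptions, not the procedure used here: the helper names, the name prefix, and the description are hypothetical, and `NoReboot=True` is an assumed choice that avoids downtime at the cost of weaker file system consistency guarantees.

```python
"""Sketch: request parameters for creating a timestamped golden AMI."""
from datetime import datetime, timezone
from typing import Optional


def build_create_image_params(
    instance_id: str,
    prefix: str = "bastion-golden-ami",
    now: Optional[datetime] = None,
) -> dict:
    """Keyword arguments for ec2.create_image with a unique, sortable AMI name."""
    now = now or datetime.now(timezone.utc)
    return {
        "InstanceId": instance_id,
        "Name": f"{prefix}-{now:%Y%m%d-%H%M%S}",
        "Description": "Golden AMI created from a running bastion ASG instance",
        "NoReboot": True,  # assumption: do not stop the instance while imaging
    }


def create_golden_ami(instance_id: str) -> str:
    """Create the AMI and return its ID. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder works without boto3 installed

    ec2 = boto3.client("ec2")
    return ec2.create_image(**build_create_image_params(instance_id))["ImageId"]
```

A timestamp in the name keeps successive golden AMI versions distinct, since AMI names must be unique within an account and Region.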

By deploying this unconventional bastion host with an internet-facing Network Load Balancer and incorporating additional applications such as Lynis Scan, Splunk Forwarder, and the customized CloudWatch Agent, I improved the security hardening and monitoring capabilities of the development environment while reducing costs with scheduled EC2s.

Userdata Code sample used for Launch Template/Golden AMI

#cloud-config
package_update: true
package_upgrade: true
runcmd:
  - yum install -y amazon-efs-utils
  - apt-get -y install amazon-efs-utils
  - yum install -y nfs-utils
  - apt-get -y install nfs-common
  - file_system_id_1=fs-[XXXXXXXXXXXX]
  - efs_mount_point_1=/home-directories
  - mkdir -p "${efs_mount_point_1}"
  - test -f "/sbin/mount.efs" && printf "\n${file_system_id_1}:/ ${efs_mount_point_1} efs tls,_netdev\n" >> /etc/fstab || printf "\n${file_system_id_1}.efs.us-east-1.amazonaws.com:/ ${efs_mount_point_1} nfs4 nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport,_netdev 0 0\n" >> /etc/fstab
  - test -f "/sbin/mount.efs" && grep -ozP 'client-info]\nsource' '/etc/amazon/efs/efs-utils.conf'; if [[ $? == 1 ]]; then printf "\n[client-info]\nsource=liw\n" >> /etc/amazon/efs/efs-utils.conf; fi;
  - retryCnt=15; waitTime=30; while true; do mount -a -t efs,nfs4 defaults; if [ $? = 0 ] || [ $retryCnt -lt 1 ]; then echo File system mounted successfully; break; fi; echo File system not available, retrying to mount.; ((retryCnt--)); sleep $waitTime; done;
  - amazon-linux-extras enable lynis
  - amazon-linux-extras install -y lynis
  - yum install ansible -y
  - yum install git -y
  - yum install amazon-cloudwatch-agent -y
  - sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl -a start -c default
  - sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl -a fetch-config -m ec2 -s -c file:amazon-cloudwatch-agent.json

Lynis Scan Shell Script for SSM Run Command Cronjob

#!/bin/bash
# Run through SSM Run Command on every instance targeted by the tag Env=Bastion Host.

# Set the name of the Auto Scaling Group
ASG_NAME="Bastion-Host-ASG"

# Set the EFS directory where you want to output the audit reports.
# The file system is already mounted at /home-directories by the user data.
EFS_DIRECTORY="/home-directories/audit_reports"

# Get the private IP address of this instance to name its report
PRIVATE_IP=$(hostname -I | awk '{print $1}')

# Create the report directory on the shared file system if it does not exist
mkdir -p "$EFS_DIRECTORY"

# Run the Lynis scan, keeping a local copy and writing the report to EFS
lynis audit system --quick | tee /tmp/lynis-audit.txt > "$EFS_DIRECTORY/lynis-audit-$PRIVATE_IP.txt"

# Cron entry to run the lynis-scan.sh script at 12:00 pm every other day:
# 0 12 */2 * * /etc/cron.daily/lynis/lynis-scan.sh

Lambda Function to Auto Start EC2s

# Must have IAM permissions for the Lambda function to interact with EC2 instances
# Ensure you have properly set up your Lambda function trigger.
import boto3
import logging

# Setup simple logging for INFO
logger = logging.getLogger()
logger.setLevel(logging.INFO)

# define the ec2 connection
ec2 = boto3.resource('ec2')


def lambda_handler(event, context):
    # Use the filter() method of the instances collection to retrieve
    # all stopped ec2 instances tagged AutoOn=True.
    filters = [
        {
            'Name': 'tag:AutoOn',
            'Values': ['True']
        },
        {
            'Name': 'instance-state-name',
            'Values': ['stopped']
        }
    ]

    # filter the instances collection
    instances = ec2.instances.filter(Filters=filters)

    # locate all stopped instances
    StoppedInstances = [instance.id for instance in instances]

    # log the instances for auditing purposes
    logger.info("Stopped instances: %s", StoppedInstances)

    # Make sure there are stopped instances to start-up.
    if len(StoppedInstances) > 0:
        # perform the start-up
        ec2.instances.filter(InstanceIds=StoppedInstances).start()
        print("Starting Up Instances...")
    else:
        print("Nothing to see here")

    return {
        'statusCode': 200,
        'body': 'Lambda function executed successfully.'
    }

Lambda Function to Auto Stop EC2s

# Must have IAM permissions for the Lambda function to interact with EC2 instances
# Ensure you have properly set up your Lambda function trigger.
import boto3
import logging

# Setup simple logging for INFO
logger = logging.getLogger()
logger.setLevel(logging.INFO)

# define the ec2 connection
ec2 = boto3.resource('ec2')


def lambda_handler(event, context):
    # Use the filter() method of the instances collection to retrieve
    # all running ec2 instances tagged AutoOff=True.
    filters = [
        {
            'Name': 'tag:AutoOff',
            'Values': ['True']
        },
        {
            'Name': 'instance-state-name',
            'Values': ['running']
        }
    ]

    # filter the instances collection
    instances = ec2.instances.filter(Filters=filters)

    # locate all running instances
    RunningInstances = [instance.id for instance in instances]

    # log the instances for auditing purposes
    logger.info("Running instances: %s", RunningInstances)

    # Make sure there are running instances to shut down
    if len(RunningInstances) > 0:
        # perform the shutdown
        ec2.instances.filter(InstanceIds=RunningInstances).stop()
        print("Shutting Down")
    else:
        print("Nothing to see here")

    return {
        'statusCode': 200,
        'body': 'Lambda function executed successfully.'
    }

Custom MOTD For Pro-Core Plus Development Environment

#!/bin/bash
cat <<'EOF' >> /etc/motd
   ___             ___                    ___ _
  / _ \_ __ ___   / __\___  _ __ ___     / _ \ |_   _ ___
 / /_)/ '__/ _ \ / /  / _ \| '__/ _ \   / /_)/ | | | / __|
/ ___/| | | (_) / /__| (_) | | |  __/  / ___/| | |_| \__ \
\/    |_|  \___/\____/\___/|_|  \___|  \/    |_|\__,_|___/

* * * * * * * W A R N I N G * * * * * * * * * *
This computer system is the property of ProCore Plus. It is for authorized use only.
By using this system, all users acknowledge notice of, and agree to comply with,
the Acceptable Use of Information Technology Resources Policy (“AUP”).
Unauthorized or improper use of this system may result in administrative disciplinary
action, civil charges/criminal penalties, and/or other sanctions as set forth in the AUP.
By continuing to use this system you indicate your awareness of and consent to these
terms and conditions of use.
LOG OFF IMMEDIATELY if you do not agree to the conditions stated in this warning.
* * * * * * * * * * * * * * * * * * * * * * * *
EOF

Custom CloudWatch Agent Ansible Playbook to Collect Memory and Disk Space Metrics

---
- hosts: all
  become: true
  tasks:
    - name: Download CloudWatch agent installer
      get_url:
        url: https://s3.amazonaws.com/amazoncloudwatch-agent/amazon_linux/amd64/latest/amazon-cloudwatch-agent.rpm
        dest: /tmp/amazon-cloudwatch-agent.rpm

    - name: Install CloudWatch agent
      shell: rpm -U /tmp/amazon-cloudwatch-agent.rpm

    - name: Configure CloudWatch agent to collect memory and disk space metrics
      copy:
        content: |
          {
            "metrics": {
              "namespace": "CWAgent",
              "metrics_collected": {
                "mem": {
                  "measurement": [
                    "used_percent"
                  ],
                  "metrics_collection_interval": 60
                },
                "disk": {
                  "measurement": [
                    "used_percent",
                    "inodes_free"
                  ],
                  "metrics_collection_interval": 60,
                  "resources": [
                    "*"
                  ]
                }
              }
            }
          }
        dest: /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.json

Ralph Quick, Cloud Engineer

Ralph Quick is a professional Cloud Engineer specializing in the management, maintenance, and deployment of web service applications and infrastructure for operations. His experience helps ensure that services run efficiently and securely, meeting the needs of your organization or clients.